transformers

Author | huggingface

Compiled by | VK

Source | Github

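Transformers is a state-of-the-art natural language processing library for TensorFlow 2.0 and PyTorch.

Transformers (formerly known as pytorch-transformers and pytorch-pretrained-bert) provides state-of-the-art architectures (BERT, GPT-2, RoBERTa, XLM, DistilBert, XLNet, CTRL, ...) for natural language understanding (NLU) and natural language generation (NLG). It ships more than 32 pretrained models covering over 100 languages and offers deep interoperability between TensorFlow 2.0 and PyTorch.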

Features

- As easy to use as pytorch-transformers

- As powerful and concise as Keras

- High performance on NLU and NLG tasks

- Low barrier to entry for educators and practitioners

State-of-the-art NLP for everyone

– Deep learning researchers

– Hands-on practitioners

– AI/ML/NLP teachers and educators

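Lower compute costs

– Researchers can share trained models instead of always retraining them

– Practitioners can reduce compute time and production costs

– 10 architectures with over 30 pretrained models, some of them in more than 100 languages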
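As a minimal sketch of the "share instead of retrain" idea, assuming a recent `transformers` release with PyTorch installed, the snippet below saves a model with `save_pretrained()` and rebuilds it with `from_pretrained()`. The tiny configuration sizes are hypothetical, chosen only so the example runs offline with random weights rather than downloading a real pretrained checkpoint.

```python
import tempfile

import torch
from transformers import BertConfig, BertModel

# Hypothetical tiny configuration: random weights, nothing is downloaded.
config = BertConfig(
    vocab_size=100,
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=64,
)
model = BertModel(config)

with tempfile.TemporaryDirectory() as save_dir:
    # Writes config.json plus the weight file into save_dir.
    model.save_pretrained(save_dir)
    # Anyone who receives this directory can rebuild the model without retraining.
    reloaded = BertModel.from_pretrained(save_dir)

original_state = model.state_dict()
reloaded_state = reloaded.state_dict()

# Same parameter names and bit-identical tensors after the round trip.
same = original_state.keys() == reloaded_state.keys() and all(
    torch.equal(original_state[name], reloaded_state[name]) for name in original_state
)
print(same)
```

With a real checkpoint, the directory (or a model name on the model hub) is all that needs to be shared; the recipient calls `from_pretrained()` on it in the same way.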

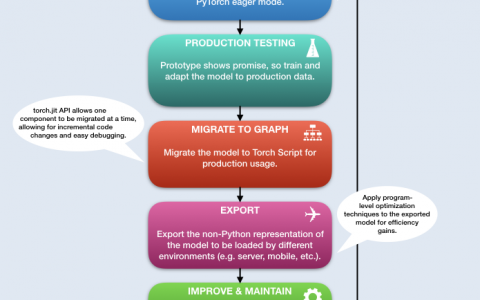

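Choose the right framework for every part of a model's lifetime

– Train state-of-the-art models in 3 lines of code

– Deep interoperability between TensorFlow 2.0 and PyTorch models

– Move a single model between the TF2.0 and PyTorch frameworks at will

– Seamlessly pick the right framework for training, evaluation and production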
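The following is a rough PyTorch sketch of a single training step, assuming `transformers` and PyTorch are installed. The toy batch and configuration sizes are hypothetical, and `torch.optim.AdamW` is PyTorch's own optimizer, used here instead of any library-specific one. It relies on the model returning the loss as the first output element when `labels` are passed.

```python
import torch
from transformers import BertConfig, BertForSequenceClassification

torch.manual_seed(0)

# Hypothetical tiny configuration with a 2-class classification head.
config = BertConfig(
    vocab_size=100,
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=64,
    num_labels=2,
)
model = BertForSequenceClassification(config)
model.train()

# Hypothetical toy batch: 4 sequences of 8 random token ids with binary labels.
input_ids = torch.randint(0, config.vocab_size, (4, 8))
labels = torch.tensor([0, 1, 0, 1])

optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)

# With labels given, element 0 of the output is the classification loss.
loss = model(input_ids, labels=labels)[0]
loss.backward()
optimizer.step()
optimizer.zero_grad()

print(loss.item())
```

Indexing with `[0]` works both with the tuple outputs of the 2.x releases and with the output objects of later releases.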

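Contents

The library currently contains PyTorch and TensorFlow implementations, pretrained model weights, usage scripts and conversion utilities for the following models: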

- BERT (from Google), released with the paper "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" by Jacob Devlin, Ming-Wei Chang, Kenton Lee and Kristina Toutanova.

- GPT (from OpenAI), released with the paper "Improving Language Understanding by Generative Pre-Training" by Alec Radford, Karthik Narasimhan, Tim Salimans and Ilya Sutskever.

- GPT-2 (from OpenAI), released with the paper "Language Models are Unsupervised Multitask Learners" by Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei and Ilya Sutskever.

- Transformer-XL (from Google/CMU), released with the paper "Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context" by Zihang Dai, Zhilin Yang, Yiming Yang, Jaime Carbonell, Quoc V. Le and Ruslan Salakhutdinov.

- XLNet (from Google/CMU), released with the paper "XLNet: Generalized Autoregressive Pretraining for Language Understanding" by Zhilin Yang, Zihang Dai, Yiming Yang, Jaime Carbonell, Ruslan Salakhutdinov and Quoc V. Le.

- XLM (from Facebook), released with the paper "Cross-lingual Language Model Pretraining" by Guillaume Lample and Alexis Conneau.

- RoBERTa (from Facebook), released with the paper "RoBERTa: A Robustly Optimized BERT Pretraining Approach" by Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer and Veselin Stoyanov.

- DistilBERT (from HuggingFace), released with the paper "DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter" by Victor Sanh, Lysandre Debut and Thomas Wolf. The same method has been applied to compress GPT2 into DistilGPT2.

- CTRL (from Salesforce), released with the paper "CTRL: A Conditional Transformer Language Model for Controllable Generation" by Nitish Shirish Keskar, Bryan McCann, Lav R. Varshney, Caiming Xiong and Richard Socher.

- CamemBERT (from FAIR, Inria, Sorbonne Université), released with the paper "CamemBERT: a Tasty French Language Model" by Louis Martin, Benjamin Muller, Pedro Javier Ortiz Suarez, Yoann Dupont, Laurent Romary, Eric Villemonte de la Clergerie, Djame Seddah and Benoît Sagot.

- ALBERT (from Google Research), released with the paper "ALBERT: A Lite BERT for Self-supervised Learning of Language Representations" by Zhenzhong Lan, Mingda Chen, Sebastian Goodman, Kevin Gimpel, Piyush Sharma and Radu Soricut.

- XLM-RoBERTa (from Facebook AI), released with the paper "Unsupervised Cross-lingual Representation Learning at Scale" by Alexis Conneau, Kartikay Khandelwal, Naman Goyal, Vishrav Chaudhary, Guillaume Wenzek, Francisco Guzmán, Edouard Grave, Myle Ott, Luke Zettlemoyer and Veselin Stoyanov.

- FlauBERT (from CNRS), released with the paper "FlauBERT: Unsupervised Language Model Pre-training for French" by Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, Benjamin Lecouteux, Alexandre Allauzen, Benoît Crabbé, Laurent Besacier and Didier Schwab.

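Table of contents

- Installation

- Installation with pip

- Installation from source

- Tests

- OpenAI GPT original tokenization workflow

- Note on model downloads (continuous integration or large-scale deployments)

- Do you want to run a Transformer model on a mobile device?

- Quickstart

- Philosophy

- Main concepts

- Quick tour: Usage

- Glossary

- Input IDs

- Attention mask

- Token type IDs

- Position IDs

- Pretrained models

- Model upload and sharing

- Examples

- TensorFlow 2.0 Bert models on GLUE

- Language model training

- Language generation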

- GLUE

- Multiple choice

- SQuAD

- XNLI

- MM-IMDb

- Adversarial evaluation of model performance

- Jupyter Notebooks

- Loading Google AI or OpenAI pretrained weights or a PyTorch dump

- from_pretrained() method

- Cache directory

- Serialization best practices

- Converting TensorFlow checkpoints

- BERT

- OpenAI GPT

- OpenAI GPT-2

- Transformer-XL

- XLNet

- XLM

- Migrating from pytorch-pretrained-bert

- Models always output tuples

- Serialization

- Optimizers: BertAdam and OpenAIAdam are now AdamW, and schedules are standard PyTorch schedules

- BERTology

- TorchScript

- Implications

- Using TorchScript in Python

- Multilingual models

- BERT

- Benchmarks

- Benchmarking inference for all models

- TF2 with mixed precision, XLA and distribution (@tlkh)
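To make the glossary terms concrete, here is a pure-Python sketch of how input IDs, an attention mask, token type IDs and position IDs line up for a padded BERT-style sentence pair. The word-piece ids are arbitrary placeholders, and the special-token ids (0, 101, 102) are an assumption mirroring the BERT uncased vocabulary; in practice the tokenizer produces all of these for you.

```python
# Assumed special-token ids, mirroring the BERT uncased vocabulary convention.
PAD_ID, CLS_ID, SEP_ID = 0, 101, 102


def encode_pair(ids_a, ids_b, max_len):
    """Return BERT-style inputs for a sentence pair, padded to max_len.

    ids_a and ids_b are word-piece ids that a tokenizer would normally produce.
    """
    # Input IDs: [CLS] A [SEP] B [SEP]
    input_ids = [CLS_ID] + ids_a + [SEP_ID] + ids_b + [SEP_ID]
    if len(input_ids) > max_len:
        raise ValueError("sentence pair does not fit in max_len")

    # Token type IDs: segment 0 is [CLS], A and the first [SEP];
    # segment 1 is B and the final [SEP].
    token_type_ids = [0] * (len(ids_a) + 2) + [1] * (len(ids_b) + 1)

    # Attention mask: 1 for real tokens, 0 for padding the model should ignore.
    attention_mask = [1] * len(input_ids)

    # Pad all three sequences to the same length.
    n_pad = max_len - len(input_ids)
    input_ids += [PAD_ID] * n_pad
    token_type_ids += [0] * n_pad
    attention_mask += [0] * n_pad

    # Position IDs: each token's index (what BERT uses when none are passed).
    position_ids = list(range(max_len))

    return {
        "input_ids": input_ids,
        "token_type_ids": token_type_ids,
        "attention_mask": attention_mask,
        "position_ids": position_ids,
    }


# Hypothetical ids for a two-token first sentence and a one-token second sentence.
batch_item = encode_pair([11, 12], [21], max_len=8)
for name, values in batch_item.items():
    print(f"{name:15} {values}")
```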

Main classes

- Configuration

- PretrainedConfig

- Models

- PreTrainedModel

- TFPreTrainedModel

- Tokenizer

- PreTrainedTokenizer

- Optimizer

- AdamW

- AdamWeightDecay

- Schedules

- Learning Rate Schedules

- Warmup

- Gradient Strategies

- GradientAccumulator

- Processors

- Processors

- GLUE

- XNLI

- SQuAD
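The Schedules and Warmup entries refer to learning-rate multipliers applied on top of the optimizer's base rate. Below is a minimal pure-Python sketch of one common shape, linear warmup followed by linear decay. The helper name and the numbers are hypothetical; recent `transformers` releases expose a schedule of this shape as `get_linear_schedule_with_warmup`, which wraps a comparable multiplier in a PyTorch `LambdaLR`.

```python
def linear_warmup_then_decay(step, warmup_steps, total_steps):
    """Learning-rate multiplier for a given optimizer step (hypothetical helper).

    Rises linearly from 0 to 1 over warmup_steps, then falls linearly to 0 at total_steps.
    """
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


base_lr = 5e-5  # hypothetical peak learning rate
multipliers = [linear_warmup_then_decay(s, warmup_steps=2, total_steps=10) for s in range(11)]
learning_rates = [base_lr * m for m in multipliers]
print(multipliers)
```

To drive a PyTorch optimizer with it, one option is `torch.optim.lr_scheduler.LambdaLR(optimizer, lambda s: linear_warmup_then_decay(s, 2, 10))`, calling `scheduler.step()` after each `optimizer.step()`.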

Package reference

- AutoModels

- AutoConfig

- AutoTokenizer

- AutoModel

- AutoModelForPreTraining

- AutoModelWithLMHead

- AutoModelForSequenceClassification

- AutoModelForQuestionAnswering

- AutoModelForTokenClassification

- BERT

- Overview

- BertConfig

- BertTokenizer

- BertModel

- BertForPreTraining

- BertForMaskedLM

- BertForNextSentencePrediction

- BertForSequenceClassification

- BertForMultipleChoice

- BertForTokenClassification

- BertForQuestionAnswering

- TFBertModel

- TFBertForPreTraining

- TFBertForMaskedLM

- TFBertForNextSentencePrediction

- TFBertForSequenceClassification

- TFBertForMultipleChoice

- TFBertForTokenClassification

- TFBertForQuestionAnswering

- OpenAI GPT

- Overview

- OpenAIGPTConfig

- OpenAIGPTTokenizer

- OpenAIGPTModel

- OpenAIGPTLMHeadModel

- OpenAIGPTDoubleHeadsModel

- TFOpenAIGPTModel

- TFOpenAIGPTLMHeadModel

- TFOpenAIGPTDoubleHeadsModel

- Transformer XL

- Overview

- TransfoXLConfig

- TransfoXLTokenizer

- TransfoXLModel

- TransfoXLLMHeadModel

- TFTransfoXLModel

- TFTransfoXLLMHeadModel

- OpenAI GPT2

- Overview

- GPT2Config

- GPT2Tokenizer

- GPT2Model

- GPT2LMHeadModel

- GPT2DoubleHeadsModel

- TFGPT2Model

- TFGPT2LMHeadModel

- TFGPT2DoubleHeadsModel

- XLM

- Overview

- XLMConfig

- XLMTokenizer

- XLMModel

- XLMWithLMHeadModel

- XLMForSequenceClassification

- XLMForQuestionAnsweringSimple

- XLMForQuestionAnswering

- TFXLMModel

- TFXLMWithLMHeadModel

- TFXLMForSequenceClassification

- TFXLMForQuestionAnsweringSimple

- XLNet

- Overview

- XLNetConfig

- XLNetTokenizer

- XLNetModel

- XLNetLMHeadModel

- XLNetForSequenceClassification

- XLNetForTokenClassification

- XLNetForMultipleChoice

- XLNetForQuestionAnsweringSimple

- XLNetForQuestionAnswering

- TFXLNetModel

- TFXLNetLMHeadModel

- TFXLNetForSequenceClassification

- TFXLNetForQuestionAnsweringSimple

- RoBERTa

- RobertaConfig

- RobertaTokenizer

- RobertaModel

- RobertaForMaskedLM

- RobertaForSequenceClassification

- RobertaForTokenClassification

- TFRobertaModel

- TFRobertaForMaskedLM

- TFRobertaForSequenceClassification

- TFRobertaForTokenClassification

- DistilBERT

- DistilBertConfig

- DistilBertTokenizer

- DistilBertModel

- DistilBertForMaskedLM

- DistilBertForSequenceClassification

- DistilBertForQuestionAnswering

- TFDistilBertModel

- TFDistilBertForMaskedLM

- TFDistilBertForSequenceClassification

- TFDistilBertForQuestionAnswering

- CTRL

- CTRLConfig

- CTRLTokenizer

- CTRLModel

- CTRLLMHeadModel

- TFCTRLModel

- TFCTRLLMHeadModel

- CamemBERT

- CamembertConfig

- CamembertTokenizer

- CamembertModel

- CamembertForMaskedLM

- CamembertForSequenceClassification

- CamembertForMultipleChoice

- CamembertForTokenClassification

- TFCamembertModel

- TFCamembertForMaskedLM

- TFCamembertForSequenceClassification

- TFCamembertForTokenClassification

- ALBERT

- Overview

- AlbertConfig

- AlbertTokenizer

- AlbertModel

- AlbertForMaskedLM

- AlbertForSequenceClassification

- AlbertForQuestionAnswering

- TFAlbertModel

- TFAlbertForMaskedLM

- TFAlbertForSequenceClassification

- XLM-RoBERTa

- XLMRobertaConfig

- XLMRobertaTokenizer

- XLMRobertaModel

- XLMRobertaForMaskedLM

- XLMRobertaForSequenceClassification

- XLMRobertaForMultipleChoice

- XLMRobertaForTokenClassification

- TFXLMRobertaModel

- TFXLMRobertaForMaskedLM

- TFXLMRobertaForSequenceClassification

- TFXLMRobertaForTokenClassification

- FlauBERT

- FlaubertConfig

- FlaubertTokenizer

- FlaubertModel

- FlaubertWithLMHeadModel

- FlaubertForSequenceClassification

- FlaubertForQuestionAnsweringSimple

- FlaubertForQuestionAnswering

- Bart

- BartModel

- BartForMaskedLM

- BartForSequenceClassification

- BartConfig

- Automatic Creation of Decoder Inputs
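The Auto classes choose a concrete architecture for you. As a sketch, assuming a recent `transformers` release in which `config.json` records a `model_type` field, the snippet saves a tiny randomly initialised BERT to a temporary directory and reloads it through `AutoConfig` and `AutoModel`. The configuration sizes are hypothetical, picked so nothing has to be downloaded.

```python
import tempfile

from transformers import AutoConfig, AutoModel, BertConfig, BertModel

# Hypothetical tiny configuration so the example runs offline.
config = BertConfig(
    vocab_size=100,
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=64,
)

with tempfile.TemporaryDirectory() as model_dir:
    # save_pretrained writes config.json (with model_type "bert") and the weights.
    BertModel(config).save_pretrained(model_dir)
    # AutoConfig reads config.json and instantiates the matching config class.
    auto_config = AutoConfig.from_pretrained(model_dir)
    # AutoModel maps that config class to its base model class and loads the weights.
    auto_model = AutoModel.from_pretrained(model_dir)

print(type(auto_config).__name__, type(auto_model).__name__)
```

The same calls work with a hub model name in place of the local directory, which is how the Auto classes are usually used.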

Original article by pytorch. If you repost it, please credit the source: https://pytorchchina.com/2020/02/29/transformers-%e7%ae%80%e4%bb%8b%ef%bc%9atransformers%e6%98%aftensorflow-2-0%e5%92%8cpytorch%e7%9a%84%e6%9c%80%e6%96%b0%e8%87%aa%e7%84%b6%e8%af%ad%e8%a8%80%e5%a4%84%e7%90%86%e5%ba%93/